事件回顾:

我们收到容器服务告警通知,某个容器应用服务间歇性崩溃重启,该应用是使用Golang语言编写的,部署在阿里云k8s。

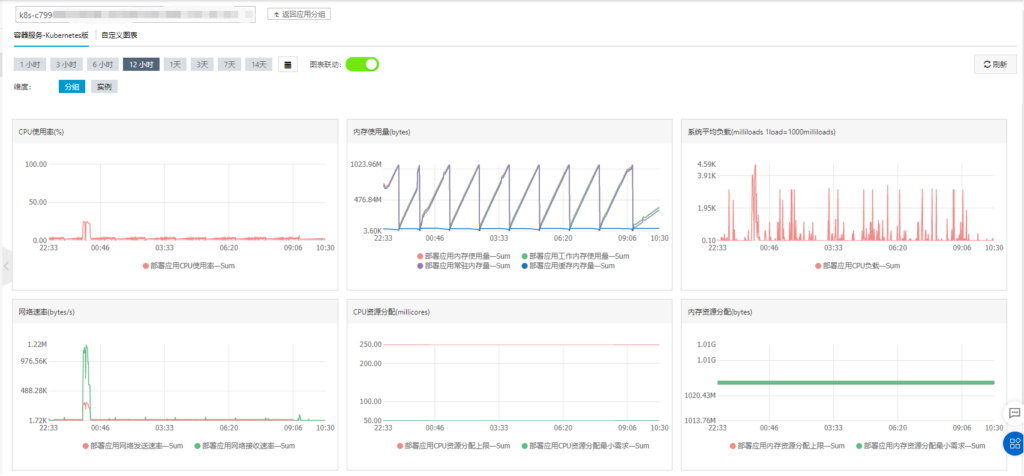

登录阿里云后台,查看容器应用监控指标,发现该应用是因为内存耗尽而崩溃:

从上图可以看出,大约每隔80分钟,应用耗尽内存崩溃重启。该应用部署了多个实例,每个实例内存上限为1G,平时内存占用是比较稳定,只在高峰期有波峰,其它时间几乎一条直线躺平,现在显然是不正常的。

排查步骤:

1、重现问题

把线上代码拉到本地配置模拟环境编译运行,发现在本地也能重现出内存泄漏,这样问题就很好排查了。但比较恶心的是,内存泄漏比较慢,一开始是看不出有啥问题的,要过比较长一段时间才能看出内存有在慢慢上升的,排查问题很耗时间(编译启动应用后,要观察一段时间才能判断应用是否内存泄漏)。

为此我编写了一个简单的脚本监控内存变化:

#!/bin/sh

pid=$1

INTERVAL=600

output="./${pid}.memory.log"

while true

do

DATE=`date +%Y-%m-%dT%H:%M:%S`

echo -n "[${DATE}]" >> ${output}

cat /proc/${pid}/status|grep -e VmRSS >> ${output}

sleep $INTERVAL

done监听结果:

cat 8371.memory.log

[2021-03-08T12:58:38]VmRSS: 18804 kB

[2021-03-08T12:59:38]VmRSS: 20852 kB

[2021-03-08T13:00:38]VmRSS: 22816 kB

[2021-03-08T13:01:38]VmRSS: 26912 kB

[2021-03-08T13:02:38]VmRSS: 28960 kB

[2021-03-08T13:03:38]VmRSS: 31208 kB

[2021-03-08T13:04:38]VmRSS: 35548 kB

[2021-03-08T13:05:38]VmRSS: 37596 kB

[2021-03-08T13:06:38]VmRSS: 39872 kB

[2021-03-08T13:07:38]VmRSS: 41920 kB

[2021-03-08T13:08:38]VmRSS: 46016 kB

[2021-03-08T13:09:38]VmRSS: 48064 kB

[2021-03-08T13:10:38]VmRSS: 50344 kB

[2021-03-08T13:11:38]VmRSS: 52392 kB

[2021-03-08T13:12:38]VmRSS: 56488 kB

[2021-03-08T13:13:38]VmRSS: 58772 kB

[2021-03-08T13:14:38]VmRSS: 61048 kB

[2021-03-08T13:15:38]VmRSS: 63096 kB

[2021-03-08T13:16:38]VmRSS: 66736 kB

[2021-03-08T13:17:38]VmRSS: 69416 kB

[2021-03-08T13:18:38]VmRSS: 78124 kB

[2021-03-08T13:19:38]VmRSS: 80172 kB

[2021-03-08T13:20:38]VmRSS: 84516 kB

[2021-03-08T13:21:38]VmRSS: 84516 kB

[2021-03-08T13:22:38]VmRSS: 88612 kB

[2021-03-08T13:23:38]VmRSS: 90660 kB

[2021-03-08T13:24:38]VmRSS: 92708 kB

[2021-03-08T13:25:38]VmRSS: 96792 kB

[2021-03-08T13:26:38]VmRSS: 98840 kB

[2021-03-08T13:27:38]VmRSS: 101144 kB

[2021-03-08T13:28:38]VmRSS: 103444 kB

[2021-03-08T13:29:38]VmRSS: 105748 kB

[2021-03-08T13:30:38]VmRSS: 109844 kB

[2021-03-08T13:31:38]VmRSS: 111892 kB

[2021-03-08T13:32:38]VmRSS: 113940 kB

[2021-03-08T13:33:38]VmRSS: 115988 kB结果显示内存占用在不断攀升。

2、定位导致异常的代码。

在此期间已有多人提交分支代码并上线,commit比较多,不清楚到底是哪个提交代码导致问题的。因此先定位导致出现问题的代码在哪里,这里简单粗暴使用git bisect进行二分法定位。大概原理是:先回滚至前80个提交,测试发现没有内存泄漏,再回滚到前40个提交,没发现问题,再回滚至前20个提交,出现内存泄漏。OK,导致问题的代码在前40到前20的提交之间,范围缩小了。随后再次在40到20之间进行二分法,直到找到最终的有问题的代码提交,然后跟前一个代码提交进行diff对比,即可知道修改了哪些代码导致出现问题。先找出最近正常的提交, 例如7天前是正常的,查找7天前的记录,然后回滚,编译运行检查是否正常。

git branch xxx

git checkout xxx

git log --pretty=format:"%h,%an,%ar : %s" --before="2021-02-25"

git reset --hard 8d41fdee6

make run找到最近的正常提交后,就可以使用git bisect定位出问题的提交了:

#1 开始使用git bisect

git bisect start

#2 记录当前提交记录为异常的提交

git bisect bad

#3 已知提交8d41fdee6是正常的(没有内存泄漏),标记该提交为正常提交

git bisect good 8d41fdee6

Bisecting: 1261 revisions left to test after this (roughly 10 steps)

[9cfecc52542bbfe6d21ceab86c0cf868a6718a19] Merge branch 'feat/er-v2.14.0-batch' into test

#5 此时git会提示已回滚至一个中间节点,编译运行再检查是good还是bad,然后选择执行对应的命令:

git bisect good [commitID]

git bisect bad[commitID]

#6 找到后会提示:

[commitID] is first bad commit

#7 找到后恢复之前状态

git bisect reset

#8 review差异代码,commitID1是正常的提交,commitID2是紧邻的异常提交

git diff [commitID1] [commitID2]3、使用性能剖析工具pprof分析内存

go tool pprof https://***/api/debug/pprof/heap

top 20 -cum

top 20 -flat

go tool pprof https://***/api/debug/pprof/heap

Fetching profile over HTTP from https://***/api/debug/pprof/heap

Saved profile in /home/tenhan/pprof/pprof.server.alloc_objects.alloc_space.inuse_objects.inuse_space.009.pb.gz

File: server

Type: inuse_space

Time: Mar 4, 2021 at 12:01pm (HKT)

Entering interactive mode (type "help" for commands, "o" for options)

(pprof) top 20 -cum

Showing nodes accounting for 166.04MB, 67.89% of 244.56MB total

Dropped 67 nodes (cum <= 1.22MB)

Showing top 20 nodes out of 71

flat flat% sum% cum cum%

0 0% 0% 192.54MB 78.73% github.com/apache/rocketmq-client-go/v2/primitive.WithRecover

13.50MB 5.52% 5.52% 100.52MB 41.10% github.com/apache/rocketmq-client-go/v2/internal.GetOrNewRocketMQClient.func2

0 0% 5.52% 100.52MB 41.10% github.com/apache/rocketmq-client-go/v2/internal/remote.(*remotingClient).processCMD.func2

0 0% 5.52% 92.02MB 37.63% github.com/apache/rocketmq-client-go/v2/internal/remote.(*remotingClient).connect.func1

0.50MB 0.2% 5.73% 92.02MB 37.63% github.com/apache/rocketmq-client-go/v2/internal/remote.(*remotingClient).receiveResponse

36.01MB 14.73% 20.45% 91.52MB 37.42% github.com/apache/rocketmq-client-go/v2/internal/remote.decode

34.01MB 13.91% 34.36% 87.01MB 35.58% github.com/apache/rocketmq-client-go/v2/primitive.DecodeMessage

9MB 3.68% 38.04% 55.01MB 22.49% github.com/apache/rocketmq-client-go/v2/internal/remote.(*jsonCodec).decodeHeader

0 0% 38.04% 46.01MB 18.81% github.com/json-iterator/go.(*Iterator).ReadVal

0 0% 38.04% 46.01MB 18.81% github.com/json-iterator/go.(*frozenConfig).Unmarshal

0 0% 38.04% 46.01MB 18.81% github.com/json-iterator/go.(*generalStructDecoder).Decode

0 0% 38.04% 46.01MB 18.81% github.com/json-iterator/go.(*generalStructDecoder).decodeOneField

0 0% 38.04% 46.01MB 18.81% github.com/json-iterator/go.Unmarshal (inline)

0 0% 38.04% 44.51MB 18.20% github.com/json-iterator/go.(*mapDecoder).Decode

0 0% 38.04% 44.51MB 18.20% github.com/json-iterator/go.(*structFieldDecoder).Decode

43.01MB 17.59% 55.62% 43.01MB 17.59% github.com/apache/rocketmq-client-go/v2/primitive.(*Message).UnmarshalProperties

30.01MB 12.27% 67.89% 30.01MB 12.27% runtime.malg

0 0% 67.89% 30.01MB 12.27% runtime.mstart

0 0% 67.89% 30.01MB 12.27% runtime.newproc.func1

0 0% 67.89% 30.01MB 12.27% runtime.newproc1

(pprof)

(pprof) top 20 -flat

Active filters:

ignore=flat

Showing nodes accounting for 238.52MB, 97.53% of 244.56MB total

Dropped 67 nodes (cum <= 1.22MB)

Showing top 20 nodes out of 71

flat flat% sum% cum cum%

43.01MB 17.59% 17.59% 43.01MB 17.59% github.com/apache/rocketmq-client-go/v2/primitive.(*Message).UnmarshalProperties

36.01MB 14.73% 32.31% 91.52MB 37.42% github.com/apache/rocketmq-client-go/v2/internal/remote.decode

34.01MB 13.91% 46.22% 87.01MB 35.58% github.com/apache/rocketmq-client-go/v2/primitive.DecodeMessage

30.01MB 12.27% 58.49% 30.01MB 12.27% runtime.malg

29.51MB 12.07% 70.55% 29.51MB 12.07% reflect.mapassign

16.50MB 6.75% 77.30% 16.50MB 6.75% github.com/json-iterator/go.(*Iterator).ReadString

13.50MB 5.52% 82.82% 100.52MB 41.10% github.com/apache/rocketmq-client-go/v2/internal.GetOrNewRocketMQClient.func2

9MB 3.68% 86.50% 55.01MB 22.49% github.com/apache/rocketmq-client-go/v2/internal/remote.(*jsonCodec).decodeHeader

7.18MB 2.94% 89.44% 7.18MB 2.94% bytes.makeSlice

7MB 2.86% 92.30% 7MB 2.86% fmt.Sprintf

3MB 1.23% 93.53% 3MB 1.23% strings.(*Builder).grow (inline)

2.50MB 1.02% 94.55% 2.50MB 1.02% regexp.onePassCopy

2.50MB 1.02% 95.57% 2.50MB 1.02% sync.runtime_Semacquire

1.78MB 0.73% 96.30% 1.78MB 0.73% github.com/mozillazg/go-pinyin.init

1.50MB 0.61% 96.92% 1.50MB 0.61% regexp/syntax.(*compiler).inst

1MB 0.41% 97.33% 1.50MB 0.61% github.com/jinzhu/gorm.(*Scope).GetModelStruct

0.50MB 0.2% 97.53% 92.02MB 37.63% github.com/apache/rocketmq-client-go/v2/internal/remote.(*remotingClient).receiveResponse

0 0% 97.53% 7.18MB 2.94% bytes.(*Buffer).WriteString

0 0% 97.53% 7.18MB 2.94% bytes.(*Buffer).grow

0 0% 97.53% 7.68MB 3.14% encoding/json.(*Encoder).Encode

(pprof)结果说明:

flat--- 函数占的内存

flat% --- 函数相对于总内存的百分比

sum% --- 前面累计每一行flat 占比

cum --- 函数以及函数调用的其他函数总内存

cum --- 对应的百分比从内存分析的数据可以看出,github.com/apache/rocketmq-client-go/v2/primitive.WithRecover 这个方法居然这么耗内存,足足有上百兆,因此可以断定内存泄漏与rocketmq的SDK有关。

再监控一下goroutine:

http://***/debug/pprof/goroutine?debug=1

goroutine profile: total 20452

20410 @ 0x43bbc5 0x4076ea 0x407495 0x7e8490 0x662389 0x652149 0x470881

# 0x7e848f github.com/apache/rocketmq-client-go/v2/internal.GetOrNewRocketMQClient.func2+0x24f /home/tenhan/go/pkg/mod/github.com/apache/rocketmq-client-go/v2@v2.0.0/internal/client.go:220

# 0x662388 github.com/apache/rocketmq-client-go/v2/internal/remote.(*remotingClient).processCMD.func2+0x68 /home/tenhan/go/pkg/mod/github.com/apache/rocketmq-client-go/v2@v2.0.0/internal/remote/remote_client.go:207

# 0x652148 github.com/apache/rocketmq-client-go/v2/primitive.WithRecover+0x48 /home/tenhan/go/pkg/mod/github.com/apache/rocketmq-client-go/v2@v2.0.0/primitive/base.go:96

5 @ 0x43bbc5 0x4343db 0x46b075 0x4d42e5 0x4d5325 0x4d5303 0x5e266f 0x5f63ee 0x4d0567 0x6607db 0x6607aa 0x662253 0x652149 0x470881

# 0x46b074 internal/poll.runtime_pollWait+0x54 /usr/local/go/src/runtime/netpoll.go:222

# 0x4d42e4 internal/poll.(*pollDesc).wait+0x44 /usr/local/go/src/internal/poll/fd_poll_runtime.go:87

# 0x4d5324 internal/poll.(*pollDesc).waitRead+0x1a4 /usr/local/go/src/internal/poll/fd_poll_runtime.go:92

# 0x4d5302 internal/poll.(*FD).Read+0x182 /usr/local/go/src/internal/poll/fd_unix.go:159

# 0x5e266e net.(*netFD).Read+0x4e /usr/local/go/src/net/fd_posix.go:55

# 0x5f63ed net.(*conn).Read+0x8d /usr/local/go/src/net/net.go:182

# 0x4d0566 io.ReadAtLeast+0x86 /usr/local/go/src/io/io.go:314

# 0x6607da io.ReadFull+0x11a /usr/local/go/src/io/io.go:333

# 0x6607a9 github.com/apache/rocketmq-client-go/v2/internal/remote.(*remotingClient).receiveResponse+0xe9 /home/tenhan/go/pkg/mod/github.com/apache/rocketmq-client-go/v2@v2.0.0/internal/remote/remote_client.go:158

# 0x662252 github.com/apache/rocketmq-client-go/v2/internal/remote.(*remotingClient).connect.func1+0x32 /home/tenhan/go/pkg/mod/github.com/apache/rocketmq-client-go/v2@v2.0.0/internal/remote/remote_client.go:133

# 0x652148 github.com/apache/rocketmq-client-go/v2/primitive.WithRecover+0x48 /home/tenhan/go/pkg/mod/github.com/apache/rocketmq-client-go/v2@v2.0.0/primitive/base.go:96

居然有2万个goroutine!!!这是把内存耗尽的真正的罪魁祸首。

4、 review代码。

一般在这个阶段,基本上能找出大部分问题。我们发现在出问题的代码中,使用了SDK是Apache官方的RocketMQ SDK ,版本为v2.0,0,GitHub地址为:https://github.com/apache/rocketmq-client-go

不幸的是,通过代码对比,也找同事一起review确认,使用方法跟官方文档的一致,并看不出有什么问题。而且执行demo也是正常的。

那会不会是SDK的这个版本版本存在内存泄漏?我到github仓库查找相关的issue和PR,真的找到了:https://github.com/apache/rocketmq-client-go/issues/307

https://github.com/apache/rocketmq-client-go/issues/561

实际上并不是内存泄漏,而是生产者客户端启用大量的goroutine导致的,跟上面pprof分析出来的数据差不多,也是因为存在大量的goroutine导致内存被耗尽。遗憾的是,这几个issue并没有人搭理。于是打破砂锅问到底的我决定要一探究竟是什么原因导致发生内存泄漏。

6、修复SDK

先在GitHub上fork代码

基操一波:

git clone 你fork的仓库代码

cd rocketmq-client-go

git checkout -b fix/xxxx

vim producer/producer.go

git add producer/producer.go

git commit -s -v

git push(上面涉及到github的ssh认证,gpg签名,这里不赘述)

OK,我们可以在项目中使用自己修改过的SDK了。编辑go.mod文件,添加替换规则:

replace github.com/apache/rocketmq-client-go/v2 v2.0.0 => github.com/你的版本库/v2 38f77f638f77f6 是你提交的commit id

go mod tody 把依赖下载下来。

进入debug模式,添加环境变量:ROCKETMQ_GO_LOG_LEVEL=debug

这个变量我是从源码中找到的:github.com/apache/rocketmq-client-go/v2/rlog/log.go

func init() {

r := &defaultLogger{

logger: logrus.New(),

}

level := os.Getenv("ROCKETMQ_GO_LOG_LEVEL")

switch strings.ToLower(level) {

case "debug":

r.logger.SetLevel(logrus.DebugLevel)

case "warn":

r.logger.SetLevel(logrus.WarnLevel)

case "error":

r.logger.SetLevel(logrus.ErrorLevel)

default:

r.logger.SetLevel(logrus.InfoLevel)

}

rLog = r

}这里我在SDK加了很多埋点日志,例如在github.com/apache/rocketmq-client-go/v2/internal/client.go文件中:

client.remoteClient.RegisterRequestFunc(ReqCheckTransactionState, func(req *remote.RemotingCommand, addr net.Addr) *remote.RemotingCommand {

// ...此处已省略一堆代码

rlog.Debug("before callbackCh <- callback",nil)

callbackCh <- callback

rlog.Debug("aftercallbackCh <- callback",nil)

return nil

})这段代码注册了一个对事务进行检查确认的处理方法,日志结果为:

DEBU[0027] before callbackCh <- callback

DEBU[0027] before callbackCh <- callback

DEBU[0027] before callbackCh <- callback

DEBU[0027] before callbackCh <- callback

DEBU[0027] before callbackCh <- callback

DEBU[0027] before callbackCh <- callback

DEBU[0027] before callbackCh <- callback

DEBU[0027] before callbackCh <- callback通过日志发现,after callbackCh <- callback永远不会被打印,这个方法是在goroutine里执行的,也就是说,所有goroutine都阻塞在这里了:callbackCh <- callback,因此goroutine数量只会不断地增加,却从不会结束。

经过一番代码追踪,进一步的原因找到了,竟然没有任何goroutine对callbackCh进行消费!而callbackCh是没有缓存的chan,因此任何试图往callbackCh发送消息的地方都会阻塞。对callbackCh进行消费的代码是在TransactionProducer实例调用Start()方法后才启用的,而现在我使用的是DefaultProducer实例,是没有事务功能的。

现在只需要把RocketMQ中未提交确认的事务消息进行答复确认即可,然后就不会不断询问客户端了,但是又出现了新的问题,客户端无法正确答复!

这里额外说明一下:

MySQL的事务有超时机制的,当客户端开启事务后并没有commit事务,然后断开连接,服务端会自动回滚。但RocketMQ的事务流程是这样的:

1、发送prepare消息(其实就是普通消息,只是加了一些字段标识)

2、执行本地事务

3、根据本地事务执行情况,向MQ提交消息确认是成功提交或失败回滚

4、若MQ未收到确认指令,或者发现客户端断开连接了,服务端会耐心等待客户端再次连接,然后再向客户端询问本地事务状态,并做补偿处理。

关键在于第4点,如果有一个事务消息服务端没有得到确认,服务端就会不断询问客户端,客户端重启了也会收到之前的询问,收到询问后客户端会新增一个goroutine去异步答复这个询问。是的,如果客户端答复后,这个事务就结束了,不会出现不断询问的请求。但事实却没有这样美好,对于这个消息事务,客户端无法正确答复!

原因在于:提交消息确认的消息传递的TransactionID服务端认为是非法的,我把相关的消息体打印出来,发现有2个不同的事务ID:

unknow msg:

[Message=[topic=MQ_INST_1133533817523848_BXZSV0Z1%test_topic1, body=Hello RocketMQ Go Client!, Flag=0, properties=map[MSG_REGION:cn-qingdao-publictest PGROUP:DEFAULT_CONSUMER TRACE_ON:true UNIQ_KEY:0A112BDD660800000000564213b80090 __transactionId__:9256a674866b14d1f27eafc792016c5d], TransactionId=0A112BDD660800000000564213b80090], MsgId=0A112BDD660800000000564213b80090, OffsetMsgId=0BC1F0C100002A9F000000007AA5A759,QueueId=0, StoreSize=325, QueueOffset=0, SysFlag=4, BornTimestamp=1608199171538, BornHost=101.200.170.8:0, StoreTimestamp=0, StoreHost=11.193.240.193:10911, CommitLogOffset=2057676633, BodyCRC=729402843, ReconsumeTimes=0, PreparedTransactionOffset=0], return CommitDEBU[0002] GetOrNewRocketMQClient.ReqCheckTransactionState msgExt="[Message=[topic=MQ_INST_1133533817523848_BXZSV0Z1%test_topic1, body=Hello RocketMQ Go Client!, Flag=0, properties=map[MSG_REGION:cn-qingdao-publictest PGROUP:DEFAULT_CONSUMER TRACE_ON:true UNIQ_KEY:0A112BDD660800000000564196b80070 __transactionId__:a8c2bb6b1e118b2a958cc0c175fbe6c9], TransactionId=], MsgId=0A112BDD660800000000564196b80070, OffsetMsgId=0BC1F0C100002A9F00000000771F8EBE,QueueId=0, StoreSize=325, QueueOffset=0, SysFlag=4, BornTimestamp=1608199139492, BornHost=101.200.170.8:0, StoreTimestamp=0, StoreHost=11.193.240.193:10911, CommitLogOffset=1998556862, BodyCRC=729402843, ReconsumeTimes=0, PreparedTransactionOffset=0]"

msgExt.TransactionId=注意这两个:__transactionId__:9256a674866b14d1f27eafc792016c5dTransactionId=0A112BDD660800000000564213b80090

由于我们使用的RocketMQ的阿里云自己魔改的版本,我找过运维询问过,他无法帮我获得对应的版本号。而Apache开源的RocketMQ使用的是TransactionId,通过其它代码的SDK对比确认,阿里云RocketMQ使用的是__transactionId__

Java版的SDK:https://github.com/apache/rocketmq

rocketmq/client/src/main/java/org/apache/rocketmq/client/impl/producer/DefaultMQProducerImpl.java

if (sendResult.getTransactionId() != null) {

msg.putUserProperty("__transactionId__", sendResult.getTransactionId());

}整个问题豁然开朗:

1、使用了阿里云的RocketMQ,对应的事务ID是__transactionId__。

2、RocketMQ中存在未得到确认答复的事务消息,因此服务端会不断询问客户端确认这个事务消息。

3、客户端使用的是Apache的SDK,对事务使用的是TransactionId,因此无法正确答复,服务端不断询问,客户端不断新建goroutine处理并把该询问投递至callbackCh(这个goroutine在这里阻塞)。

4、客户端启动的不是事务Producer,所以不会消费callbackCh,导致所有投递消息至callbackCh的goroutine阻塞,由于没有消费callbackCh,goroutine不断上升到数十万个已上,直至耗尽内存。

修复办法:

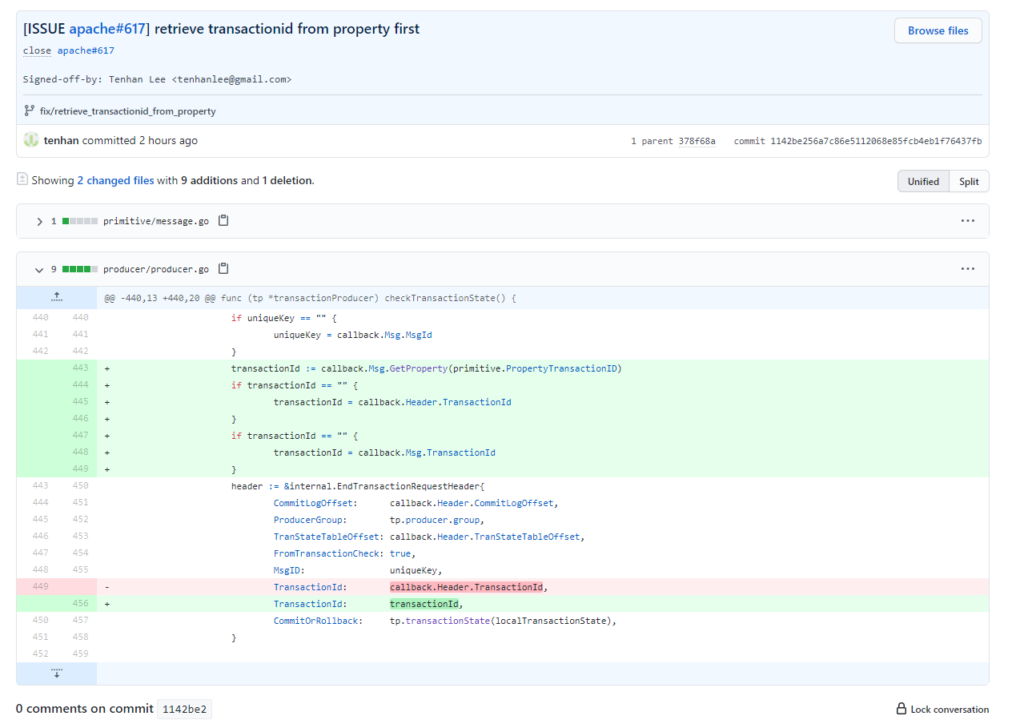

由于Apache的RocketMQ并没有使用__transactionId__,因此优先获取__transactionId__,获取不到再使用TransactionId,不会有负面影响。

修改SDK代码:

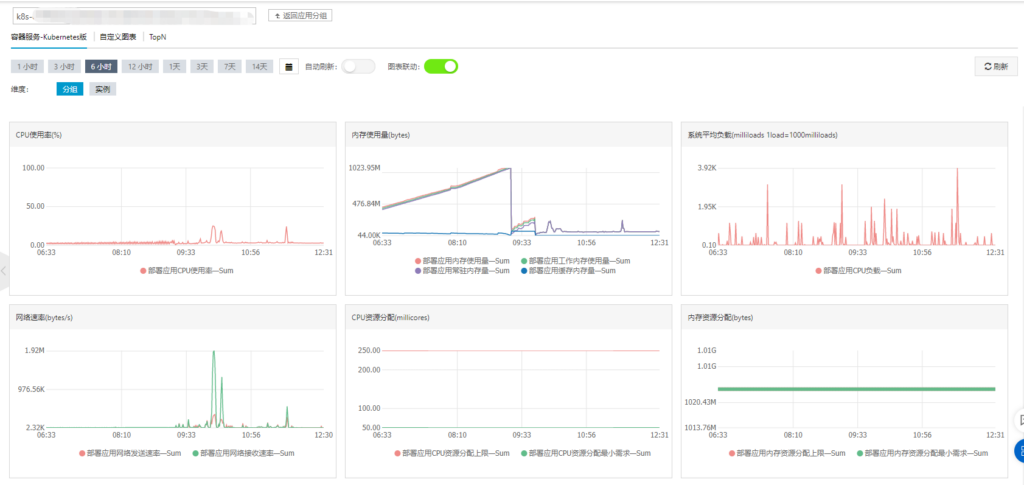

更新SDK跑一下,所有事务消息得以正确提交确认。再看一下监控:

OK,内存不再是一条上升的斜线,至此,内容泄露问题得以解决。

上述修复代码已于2021-03-16被作者合进主分支(https://github.com/apache/rocketmq-client-go/pull/620),并已经在版本2.1.0中release,如遇到类似问题,将SDK版本升级至2.1.0或以上版本即可。

2021-03-10 广州